
Instructions for setup and running on Mac Silicon chips #25

Comments

|

I heard that PyTorch added support for Apple MPS in the latest nightly release as well. Will this improve performance on M1 devices by utilizing Metal? |

|

With Homebrew brew install python3@3.10

pip3 install torch torchvision

pip3 install setuptools_rust

pip3 install -U git+https://github.com/huggingface/diffusers.git

pip3 install transformers scipy ftfy

Then start

StableDiffusion is CPU-only on M1 Macs because not all the PyTorch ops are implemented for Metal. Generating one image with 50 steps takes 4-5 minutes. |
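A quick way to confirm that the packages from the steps above can be found before starting is sketched below. The helper name `check_packages` is hypothetical, it uses only the standard library, and it assumes each pip package installs a top-level module of the same name.

```python
import importlib.util

# Module names assumed to match the pip packages installed above.
REQUIRED = ["torch", "torchvision", "diffusers", "transformers", "scipy", "ftfy"]


def check_packages(names=REQUIRED):
    """Return {module_name: bool} telling whether each module can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_packages().items():
        print(f"{name:14s} {'ok' if found else 'MISSING'}")
```

This only checks that a module is discoverable in the active environment; it does not import it, so a broken binary wheel would still show up as "ok".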

|

Hi @mja, thanks for these steps. I can get as far as the last one but then installing transformers fails with this error. (the install of setuptools_rust was successful ) for context the first step failed to install python3.10 with brew so I did it with Conda instead. Not sure if having a full anaconda env installed is the problem |

|

Just tried the PyTorch nightly build with MPS support and have some good news. On my CPU (M1 Max) it runs very slowly, almost 9 minutes per image, but with MPS enabled it's ~18x faster: less than 30 seconds per image🤩 |

|

Incredible! Would you mind sharing your exact setup so I can duplicate on my end? |

|

Unfortunately I got it working by many hours of trial and error, and in the end I don't know what worked. I'm not even a programmer, I'm just really good at googling stuff. Basically my process was:

I'm sorry that I can't be more helpful than this. |

|

Thanks. What are you currently using for checkpoints? Are you using research weights or are you using another model for now? |

|

I don't have access to the model so I haven't tested it, but based off of what @filipux said, I created this pull request to add mps support. If you can't wait for them to merge it you can clone my fork and switch to the apple-silicon-mps-support branch and try it out. Just follow the normal instructions but instead of running |

|

I couldn't quite get your fork to work @magnusviri, but based on most of @filipux's suggestions, I was able to install and generate samples on my M2 machine using https://github.com/einanao/stable-diffusion/tree/apple-silicon |

|

Edit: If you're looking at this comment now, you probably shouldn't follow this. Apparently a lot can change in 2 weeks!

Old comment

I got it to work fully natively without the CPU fallback, sort of. The way I did things is ugly since I prioritized making it work. I can't comment on speeds but my assumption is that using only the native MPS backend is faster? I used the mps_master branch from kulinseth/pytorch as a base, since it contains an implementation for

If you want to use my ugly changes, you'll have to compile PyTorch from scratch as I couldn't get the CPU fallback to work:

# clone the modified mps_master branch

git clone --recursive -b mps_master https://github.com/Raymonf/pytorch.git pytorch_mps && cd pytorch_mps

# dependencies to build (including for distributed)

# slightly modified from the docs

conda install astunparse numpy ninja pyyaml setuptools cmake cffi typing_extensions future six requests dataclasses pkg-config libuv

# build pytorch with explicit USE_DISTRIBUTED=1

USE_DISTRIBUTED=1 MACOSX_DEPLOYMENT_TARGET=12.4 CC=clang CXX=clang++ python setup.py install

I based my version of the Stable Diffusion code on the code from PR #47's branch, you can find my fork here: https://github.com/Raymonf/stable-diffusion/tree/apple-silicon-mps-support

Just your typical

Edit: It definitely takes more than 20 seconds per image at the default settings with either sampler, not sure if I did something wrong. Might be hitting pytorch/pytorch#77799 :(

@magnusviri: You are free to take anything from my branch for yourself if it's helpful at all, thanks for the PR 😃 |

|

@Raymonf: I merged your changes with mine, so they are in the pull request now. It caught everything that I missed and it is almost identical to the changes that @einanao made as well. The only difference I could see was in ldm/models/diffusion/plms.py (einanao's and Raymonf's versions of register_buffer are quoted in the reply below). I don't know what the code differences are, except that I read that adding .contiguous() fixes bugs when falling back to the CPU. |

|

Pretty sure my version is redundant (I also added a downstream call to .contiguous(), but forgot to remove this one)

On Sun, Aug 21, 2022 at 1:49 AM, James Reynolds wrote:

@Raymonf: I merged your changes with mine, so they are in the pull request now. It caught everything that I missed and it is almost identical to the changes that @einanao made as well. The only difference I could see was in ldm/models/diffusion/plms.py

einanao:

    def register_buffer(self, name, attr):
        if type(attr) == torch.Tensor:
            if attr.device != torch.device("cuda"):
                attr = attr.type(torch.float32).to(torch.device("mps")).contiguous()

Raymonf:

    def register_buffer(self, name, attr):
        if type(attr) == torch.Tensor:
            if attr.device != torch.device(self.device_available):
                attr = attr.to(torch.float32).to(torch.device(self.device_available))

I don't know what the code differences are, except that I read that adding .contiguous() fixes bugs when falling back to the cpu.
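To make the difference between the two versions concrete, here is a minimal sketch of what `.contiguous()` changes. `to_device_buffer` is a hypothetical helper written for illustration, not code from either fork; the device defaults to "cpu" so it runs anywhere, and the import is guarded so the snippet still loads where PyTorch is not installed.

```python
try:
    import torch
except ImportError:  # keeps the sketch loadable without PyTorch
    torch = None


def to_device_buffer(attr, device="cpu"):
    """Cast a tensor to float32, move it to `device`, and lay it out contiguously.

    Non-tensor values are returned unchanged.
    """
    if torch is not None and isinstance(attr, torch.Tensor):
        attr = attr.to(torch.float32).to(torch.device(device)).contiguous()
    return attr


if torch is not None:
    # Transposing returns a view with swapped strides, which is not contiguous.
    t = torch.arange(6, dtype=torch.float64).reshape(2, 3).t()
    print("before:", t.is_contiguous())
    print("after: ", to_device_buffer(t).is_contiguous())
```

Without the final `.contiguous()` the dtype cast and device move can keep the strided layout of the input, which is the case the extra call guards against. Whether that layout actually triggers the CPU-fallback bugs mentioned above is something I have not verified.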


|

|

@einanao Maybe not! How long does yours take to run the default seed and prompt with full precision? GNU time reports |

|

It takes me 1.5 minutes to generate 1 sample on a 13 inch M2 2022 |

|

I'm getting this error when trying to run with the laion400 data set:

Is this an issue with the torch functional.py script? |

|

Yes, see @filipux's earlier comment:

|

|

@einanao thank you. One step closer, but now I'm getting this: Here is my function:

|

For benchmarking purposes - I'm at ~150s (2.5 minutes) on each iteration past the first, which was over 500s after setting up with the steps in these comments. 14" 2021 Macbook Pro with base specs. (M1 Pro chip) |

|

This worked for me. I'm seeing about 30 seconds per image on a 14" M1 Max MacBook Pro (32 GPU core). |

What steps did you follow? |

|

@henrique-galimberti I followed these steps:

|

|

mps support for aten::index.Tensor_out is now in pytorch nightly according to Denis |

|

Looks like there's a ticket for the reshape error at pytorch/pytorch#80800 |

Is that the pytorch nightly branch? That particular branch is 1068 commits ahead and 28606 commits behind the master. The last commit was 15 hours ago. But master has commits kind of non-stop for the last 9 hours. |

Where can I find the functional.py file ? |

For me the path is below. Your path will be different. Then replace |

It was merged 5 days ago so it should be in the regular PyTorch nightly that you can get directly from the PyTorch site. |

I also followed these steps and confirmed MPS was being used (printed the return value of

|

I've installed Miniconda from the repo. Then I try to run: python scripts/dream.py --full_precision |

|

I believe "osx-arm64" is for M1 Macs, if you have an Intel Mac that's the wrong version. I'd just try and install Miniconda from the website and use that, just in case that's the issue. |

|

@AntonEssenetial @hawtdawg is correct. Also, I believe your Try removing it first with: Then because you are on Intel, you should try modifying the command to be:

I'm not sure if someone has made a more complete guide for Intel Macs, but the default instructions on lstein may not work for you right now. I anticipate the |

I got the lstein development branch to work on my Intel Mac without changing anything, with the only exception being installing Conda for Intel instead of Arm. So I think this is the only thing that needs to be done, and everything is the same from there on. |

That would be great 😌. |

|

ldm is active but. python scripts/dream.py --full_precision

|

Do you have the model file at |

|

@Birch-san I think there is a bug in your birch-mps-waifu branch: txt2img_fork.py picks the filename number of the last sample file and overwrites it (on each start), i.e. base_count is somehow off by one. Edit: ah, I see. It just uses |

|

@HenkPoley phew! I was scared for a moment there. since someone else reported the same thing, but I think they had also deleted some files themselves. recent stuff I've been working on is trying to optimize attention (e.g. trying matmul instead of einsum, trying the changes from Doggettx / neonsecret, trying opt_einsum, trying cosine similarity attention) but none of those ideas improved the speed. next thing I want to add is latent walks. I'm trying to do it without losing support for multi-prompt or multi-sample so it's a bit harder than copying existing code. also want to look at better img2img capabilities. |

|

fix underway for |

Thx, solved, file was damaged 🤦🏻♂️ |

|

Maybe someone knows how to switch to GPU on macbook pro with AMD Radeon Pro 5500M 4 GB, for some reason it runs on the CPU. (ldm) ➜ stable-diffusion git:(main) python scripts/dream.py --full_precision

|

|

That message doesn't mean it all runs on CPU just the some instructions, check activity monitor you'll see it using a big chunk of GPU |

thx |

|

Looks like PyTorch nightly 1.13.0.dev20220915 (or slightly earlier) fixes the 'leaked semaphore' problem (I might misattribute it to PyTorch). Or at least I haven't seen it in a while. |

|

btw, if you're running on a nightly build: beware that there's a bug with |

|

@Birch-san 👀 "Speed up stable diffusion by ~50% using flash attention" https://twitter.com/labmlai/status/1573634095732490240 ..might just be a CUDA thing, the way it (doesn't) have access to large caches. |

|

@HenkPoley oh wow! I'll have a look into it, but I think the point of Flash attention is using the hardware better. as such, I think there's only a CUDA version of it at the moment. I know there's one merged into PyTorch, but by default isn't even built. perhaps this required building PyTorch from source, enabling that build flag, and relies on having CUDA? will investigate. |

|

okay yeah, it uses HazyResearch's implementation, which is definitely CUDA-specific. |

|

Hello everyone! I've got both an x86_64 (Anaconda) and an arm64 (Miniforge3) Conda environment, but the arm64 one runs much slower than the x86 one. I have no idea how to speed it up on arm64, because it's as slow as running on the CPU. I am using the same code in both, on an M1 chip. |

|

@AkiKagura This repository has never been changed to enable MPS, so it is running on the CPU on Apple Silicon. Try another fork; I use this one. Also, don't use the PyTorch nightlies: they've totally tanked torch.einsum performance recently. Stick with the current stable release. |

|

@Vargol In fact, I am using a version of SD that has been modified to add MPS support, and it can generate a picture in about 3-4 minutes in the x86 environment (but on arm64 it's much slower). |

|

For comparison, I'm on an 8GB Mac Mini and, despite it still causing a little swap usage now and again, I get 4 it/s, so I understand it's significantly faster with 16GB+. An M1 Max with 64GB should take ~30 seconds per image for the 50 sample 512x512 image, plus the model loading time, according to the benchmarks I've seen. |

|

@AkiKagura M1 Max with 64GB RAM. The time is 26-27s for 50 steps. It was 30s, but an extra optimization was added a while ago in https://github.com/invoke-ai/InvokeAI I suggest you use that repo if you are on M1, because a lot of people are collaborating there and it has many features, such as inpainting, outpainting and textual inversion, and it can generate large images (e.g. 1024x1024 and beyond) without out-of-memory problems. Also I suggest you check out @Birch-san's repo. |
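Since the numbers in this thread mix it/s, s/it and seconds per image, a small conversion helper makes them easier to compare. This is only an arithmetic sketch; `seconds_per_image` and `speedup` are hypothetical names, and the sample figures are the approximate ones quoted in the comments (about 9 minutes on CPU versus about 30 seconds on MPS).

```python
def seconds_per_image(steps, it_per_s=None, s_per_it=None):
    """Sampling time for one image, excluding model loading.

    Give exactly one of `it_per_s` (iterations per second) or
    `s_per_it` (seconds per iteration).
    """
    if (it_per_s is None) == (s_per_it is None):
        raise ValueError("give exactly one of it_per_s or s_per_it")
    if s_per_it is None:
        s_per_it = 1.0 / it_per_s
    return steps * s_per_it


def speedup(slow_seconds, fast_seconds):
    """How many times faster the second timing is than the first."""
    return slow_seconds / fast_seconds


print(seconds_per_image(50, s_per_it=2.0))  # 50 steps at 2.0 s/it -> 100.0
print(speedup(9 * 60, 30))                  # 9 minutes vs 30 seconds -> 18.0
```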

- Change occurrences (hardcodings, default arguments) of "cuda" to accept other torch devices ("mps", "cpu")

- Auto-detect and set torch device when running on appropriate hardware

- Don't use unsupported autocast when running on MPS, and always use full-precision (float32)

- Port CrossAttention optimizations from InvokeAI stable diffusion frontend, which dramatically speed up inference

- Fix seed instability caused by torch.randn not using the global seed on MPS hardware

- Various other bugfixes from (CompVis/stable-diffusion#25)
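The first, second, third and fifth items in the list above can be sketched roughly as follows. This is not the code from the pull request: every function name here is hypothetical, the import is guarded so the snippet loads without PyTorch, and drawing the noise on the CPU is one common workaround for the seed issue that I am assuming for illustration.

```python
import contextlib

try:
    import torch
except ImportError:  # keeps the sketch loadable without PyTorch
    torch = None


def choose_device(cuda_ok, mps_ok):
    """Prefer CUDA, then MPS, then fall back to the CPU."""
    if cuda_ok:
        return "cuda"
    if mps_ok:
        return "mps"
    return "cpu"


def detect_device():
    """Ask PyTorch which backends are usable; 'cpu' if PyTorch is missing."""
    if torch is None:
        return "cpu"
    mps_backend = getattr(torch.backends, "mps", None)  # absent in older builds
    mps_ok = mps_backend is not None and mps_backend.is_available()
    return choose_device(torch.cuda.is_available(), mps_ok)


def precision_scope(device):
    """Autocast only on CUDA; MPS and CPU run in full precision (float32)."""
    if torch is not None and device == "cuda":
        return torch.autocast("cuda")
    return contextlib.nullcontext()


def seeded_noise(shape, seed, device):
    """Draw noise on the CPU with an explicit generator, then move it.

    This keeps results reproducible regardless of how the target
    device handles the global seed.
    """
    gen = torch.Generator(device="cpu").manual_seed(seed)
    return torch.randn(shape, generator=gen, device="cpu").to(device)


if __name__ == "__main__":
    device = detect_device()
    print("using device:", device)
    with precision_scope(device):
        if torch is not None:
            print(seeded_noise((1, 4, 64, 64), seed=42, device=device).shape)
```

Splitting the preference order (`choose_device`) from the hardware query (`detect_device`) keeps the decision logic testable on machines that have neither CUDA nor MPS.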

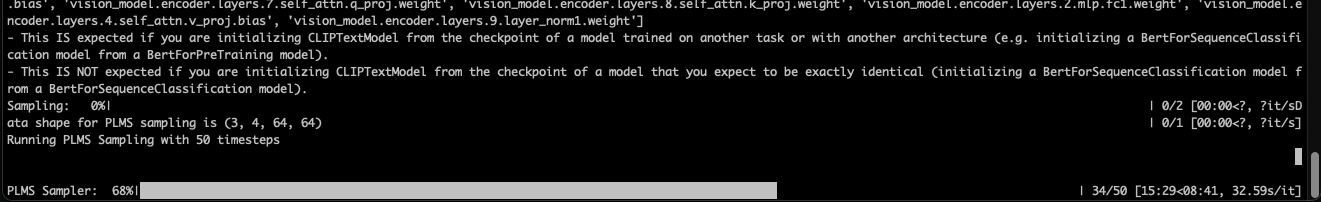

I'm using stable-diffusion on a 2022 Macbook M2 Air with 24 GB unified memory. I see this taking about 2.0s/it.

I've moved many deps from pip to conda-forge, to take advantage of the precompiled binaries. Some notes for Mac users, since I've seen a lot of confusion about this:

One doesn't need the `apple` channel to run this on a Mac; that's only used by `tensorflow-deps`, required for running tensorflow-metal. For that, I have an example environment.yml here: https://developer.apple.com/forums/thread/711792?answerId=723276022#723276022

However, the `CONDA_SUBDIR=osx-arm64` environment variable *is* needed to ensure that you do not run any Intel-specific packages such as `mkl`, which will fail with [cryptic errors](CompVis/stable-diffusion#25 (comment)) on the ARM architecture and cause the environment to break.

I've also added a comment in the env file about 3.10 not working yet. When it becomes possible to update, those commands run on an osx-arm64 machine should work to determine the new version set.

Here's what a successful run of dream.py should look like:

```
$ python scripts/dream.py --full_precision
* Initializing, be patient...
Loading model from models/ldm/stable-diffusion-v1/model.ckpt
LatentDiffusion: Running in eps-prediction mode
DiffusionWrapper has 859.52 M params.
making attention of type 'vanilla' with 512 in_channels
Working with z of shape (1, 4, 32, 32) = 4096 dimensions.
making attention of type 'vanilla' with 512 in_channels
Using slower but more accurate full-precision math (--full_precision)
>> Setting Sampler to k_lms
model loaded in 6.12s
* Initialization done! Awaiting your command (-h for help, 'q' to quit)
dream> "an astronaut riding a horse"
Generating:   0%|          | 0/1 [00:00<?, ?it/s]/Users/corajr/Documents/lstein/ldm/modules/embedding_manager.py:152: UserWarning: The operator 'aten::nonzero' is not currently supported on the MPS backend and will fall back to run on the CPU. This may have performance implications. (Triggered internally at /Users/runner/work/_temp/anaconda/conda-bld/pytorch_1662016319283/work/aten/src/ATen/mps/MPSFallback.mm:11.)
  placeholder_idx = torch.where(
100%|██████████| 50/50 [01:37<00:00, 1.95s/it]
Generating: 100%|██████████| 1/1 [01:38<00:00, 98.55s/it]
Usage stats:
  1 image(s) generated in 98.60s
  Max VRAM used for this generation: 0.00G
Outputs:
outputs/img-samples/000001.1525943180.png: "an astronaut riding a horse" -s50 -W512 -H512 -C7.5 -Ak_lms -F -S1525943180
```

|

Does anyone know what commands will increase the resolution to photographic quality, like you get from the Stable Diffusion website, and if you can get more than one image at a time. This is the only command I have right now to define the output: |

|

@BeginAnAdventure In txt2img, stable-diffusion/scripts/txt2img.py Lines 163 to 174 in 21f890f

For more than one image at a time, you might have a bad time, but the option is actually But do consider using InvokeAI or some other UI - the scripts in this repository aren't exactly "feature complete". |

That's what I thought. I did try using this one: But I keep getting errors despite no issues with doing all in Terminal. Will check out InvokeAI, just wondering if I'll need to create a whole new setup for their UI. Thanks! |

Hi,

I’ve heard it is possible to run Stable-Diffusion on Mac Silicon (albeit slowly), would be good to include basic setup and instructions to do this.

Thanks,

Chris
